17-June-25


Daily Quest #13: Infrastructure Testing

Infrastructure testing in CI/CD makes sure that provisioning with Terraform runs smoothly and works as expected. References:

- https://terratest.gruntwork.io/

- https://github.com/hashicorp/setup-terraform

With Terratest or kitchen-terraform you can write automated tests that:

- Run `terraform init` & `apply` in a temporary workspace
- Verify that the resources exist and are configured correctly
- Clean up: run `destroy` after the test completes

Real-world use case: You have a Terraform module that creates a bucket in S3 storage. Terratest applies that module, checks that the bucket was created, and then destroys the resources.
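The apply, verify, destroy cycle can be sketched without any cloud account. The following is a minimal, standard-library-only Go sketch, not Terratest's implementation: the `Infra` interface, the `fakeBucket` type, `errNotCreated`, and `runLifecycle` are hypothetical names introduced for illustration. It shows why cleanup is registered with `defer` before applying, so that resources are destroyed even when verification fails.

```go
package main

import (
	"errors"
	"fmt"
)

// Infra is a hypothetical stand-in for a Terraform module under test.
type Infra interface {
	Apply() error
	Destroy() error
}

// errNotCreated is the (hypothetical) verification failure used in this sketch.
var errNotCreated = errors.New("bucket was not created")

// fakeBucket pretends to be an S3 bucket module and records each call.
type fakeBucket struct {
	exists bool
	calls  []string
}

func (b *fakeBucket) Apply() error {
	b.calls = append(b.calls, "apply")
	b.exists = true
	return nil
}

func (b *fakeBucket) Destroy() error {
	b.calls = append(b.calls, "destroy")
	b.exists = false
	return nil
}

// runLifecycle applies the infrastructure, runs verify, and always destroys.
func runLifecycle(infra Infra, verify func() error) (err error) {
	// Register cleanup first so it runs even if Apply or verify fails.
	defer func() {
		if derr := infra.Destroy(); derr != nil && err == nil {
			err = derr
		}
	}()
	if err = infra.Apply(); err != nil {
		return err
	}
	return verify()
}

func main() {
	bucket := &fakeBucket{}
	err := runLifecycle(bucket, func() error {
		if !bucket.exists {
			return errNotCreated
		}
		return nil
	})
	fmt.Println("error:", err, "calls:", bucket.calls, "exists after test:", bucket.exists)
}
```

In a real Terratest test, `terraform.InitAndApply` and `terraform.Destroy` play the roles of `Apply` and `Destroy`, and the verification step would query the cloud provider.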

Scenario: Integrate Terratest into GitHub Actions to validate live infrastructure post-apply and pre-merge.

Make sure Go is installed first.

Create the `test/` directory and initialize a Go module in it:

    mkdir test && cd test
    go mod init ci_cd_demo_test
    go get github.com/gruntwork-io/terratest/modules/terraform

Create `test/terraform_hello_test.go`:

    package test

    import (
        "os"
        "testing"

        "github.com/gruntwork-io/terratest/modules/terraform"
        "github.com/stretchr/testify/assert"
    )

    // TestHelloFile applies the root module, checks the generated file, and destroys it.
    func TestHelloFile(t *testing.T) {
        // Retry automatically on known transient Terraform errors.
        terraformOptions := terraform.WithDefaultRetryableErrors(t, &terraform.Options{
            TerraformDir: "../",
        })

        // Clean up at the end of the test, even if an assertion fails.
        defer terraform.Destroy(t, terraformOptions)

        terraform.InitAndApply(t, terraformOptions)

        // main.tf writes the file to <module>/test/hello.txt, which is this test's working directory.
        content, err := os.ReadFile("hello.txt")
        assert.NoError(t, err)
        assert.Contains(t, string(content), "Hello, OpenTofu!")
    }
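`terraform.WithDefaultRetryableErrors` configures the test to retry Terraform commands when they fail with a known transient error. The idea can be sketched with the standard library alone. This is an illustrative sketch, not Terratest's code: `retryOn`, `isRetryable`, `flaky`, and the error patterns are hypothetical, and a real implementation would also wait between attempts.

```go
package main

import (
	"errors"
	"fmt"
	"strings"
)

// retryOn calls fn until it succeeds, fails with a non-retryable error,
// or has been retried maxRetries times. It returns the number of attempts made.
func retryOn(patterns []string, maxRetries int, fn func() error) (int, error) {
	var err error
	for attempt := 1; attempt <= maxRetries+1; attempt++ {
		err = fn()
		if err == nil || !isRetryable(err, patterns) {
			return attempt, err
		}
	}
	return maxRetries + 1, err
}

// isRetryable reports whether the error message contains any known pattern.
func isRetryable(err error, patterns []string) bool {
	for _, p := range patterns {
		if strings.Contains(err.Error(), p) {
			return true
		}
	}
	return false
}

// flaky returns a function that fails `failures` times with msg, then succeeds.
func flaky(failures int, msg string) func() error {
	return func() error {
		if failures > 0 {
			failures--
			return errors.New(msg)
		}
		return nil
	}
}

func main() {
	patterns := []string{"connection reset", "timeout"}
	attempts, err := retryOn(patterns, 3, flaky(2, "timeout while calling provider"))
	fmt.Println("attempts:", attempts, "error:", err)
}
```

Errors that match no pattern, such as a genuine configuration mistake, are returned immediately, so retries do not hide real bugs.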

Create main.tf

    terraform {
      required_providers {
        local = {
          source  = "hashicorp/local"
          version = "~> 2.0"
        }
      }

      required_version = ">= 1.0"
    }

    provider "local" {
      # No configuration needed for local provider
    }

    resource "local_file" "hello_world" {
      content  = "Hello, OpenTofu!"
      filename = "${path.module}/test/hello.txt"
    }

Create the workflow file `.github/workflows/infra-test.yml`:

    name: Infrastructure as Code (IaC) testing

    on:
      push:

    jobs:
      test-iac:
        runs-on: ubuntu-latest
        steps:
          - name: Checkout code
            uses: actions/checkout@v4

          - name: Setup Terraform
            uses: hashicorp/setup-terraform@v3
            with:
              terraform_version: 1.7.3

          # Terratest is a Go library, so the runner only needs the Go toolchain.
          - name: Setup Go
            uses: actions/setup-go@v5
            with:
              go-version: '1.24'

          - name: Run Terratest
            working-directory: test
            run: |
              go test -v

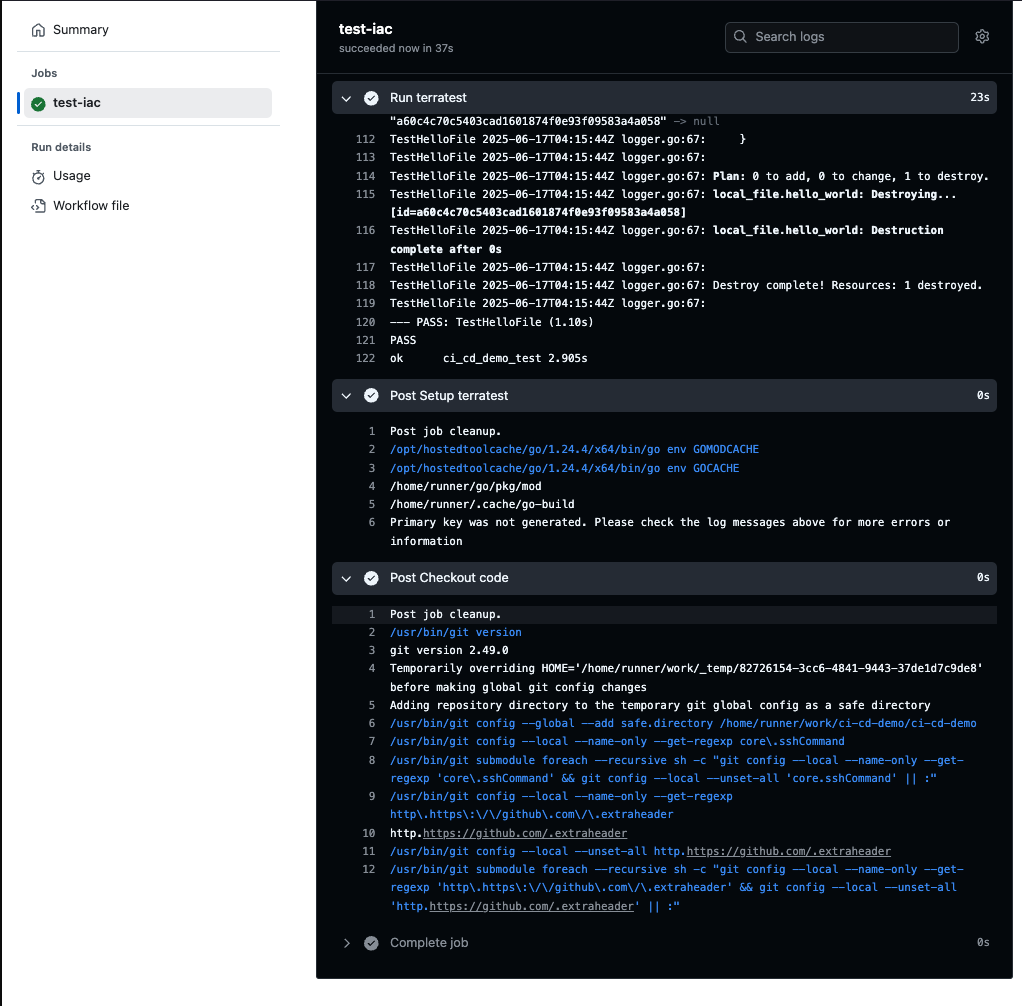

- Push, and the test passes

Answer:

- Instead of relying only on `terraform plan`/`validate`, Terratest makes it easier to write automated tests for your infrastructure code. It provides a variety of helper functions and patterns for common infrastructure testing tasks.
- Kitchen-Terraform uses the Ruby language (explained in the reflection below).

Reflection on the Answers

- Why Terratest instead of `terraform plan`/`validate`?
  - `validate` only checks the configuration statically, and `plan` only previews the changes; neither actually creates resources.
  - Terratest runs a live `init` & `apply`, then performs checks at runtime (for example reading a file or checking that a resource exists), and finally runs `destroy`. This catches bugs that only appear during real provisioning, such as permission errors, wrong paths, or environment dependencies.
- Why and how does Kitchen-Terraform use Ruby?
  - Kitchen-Terraform is a plugin for Test Kitchen, a Ruby-based infrastructure testing framework.
  - You define `platforms`, `provisioner`, and `verifier` (usually InSpec) in the `.kitchen.yml` file.
  - When run, Test Kitchen (`kitchen converge`) applies Terraform, then InSpec (`kitchen verify`) runs compliance/functional tests written in a Ruby DSL.
  - This is a good fit if your team is already familiar with the Ruby/Test Kitchen ecosystem or needs InSpec for security/compliance testing.

Daily Quest #14: Cleanup & Maintenance

Make sure the environment stays clean and that builds and runner resources stay efficient. References:

- https://docs.github.com/en/actions/writing-workflows/choosing-when-your-workflow-runs/events-that-trigger-workflows#scheduled-events

- https://docs.docker.com/reference/cli/docker/system/prune/

- https://docs.github.com/en/actions/writing-workflows/choosing-what-your-workflow-does/storing-and-sharing-data-from-a-workflow#setting-retention-period

Real-world case: A DevOps team running a `self-hosted` runner has only 50 GB of disk, so at the end of each day they need to `prune` unused images.

Scenario: Create a GitHub Actions workflow that cleans up Docker resources and volumes and sets artifact retention.

Create `cleanup-workflow.yml`:

    name: Cleanup & maintenance

    on:
      push:
        branches:
          - main
      schedule:
        - cron: '0 0 * * 0' # Every Sunday at midnight (UTC)

    jobs:
      maintenance:
        runs-on: ubuntu-latest # use a self-hosted label to clean your own runner
        steps:
          - name: Checkout repository
            uses: actions/checkout@v4

          - name: Clean up Docker resources
            run: |
              docker system prune -a -f
              docker volume prune -f

          - name: Clean up old Terraform state files
            run: |
              find ${{ github.workspace }}/test -name "*.tfstate" -mtime +7 -delete

          - name: Clean up temporary workspace
            run: |
              rm -rf ${{ github.workspace }}/tmp/* || true

          - name: Generate report
            run: |
              echo "Cleanup completed successfully on $(date)" > ${{ github.workspace }}/cleanup_report.txt
              cat ${{ github.workspace }}/cleanup_report.txt

          - name: Upload report
            uses: actions/upload-artifact@v4
            with:
              name: cleanup-report
              path: ${{ github.workspace }}/cleanup_report.txt
              retention-days: 7 # example value; tune to your retention policy

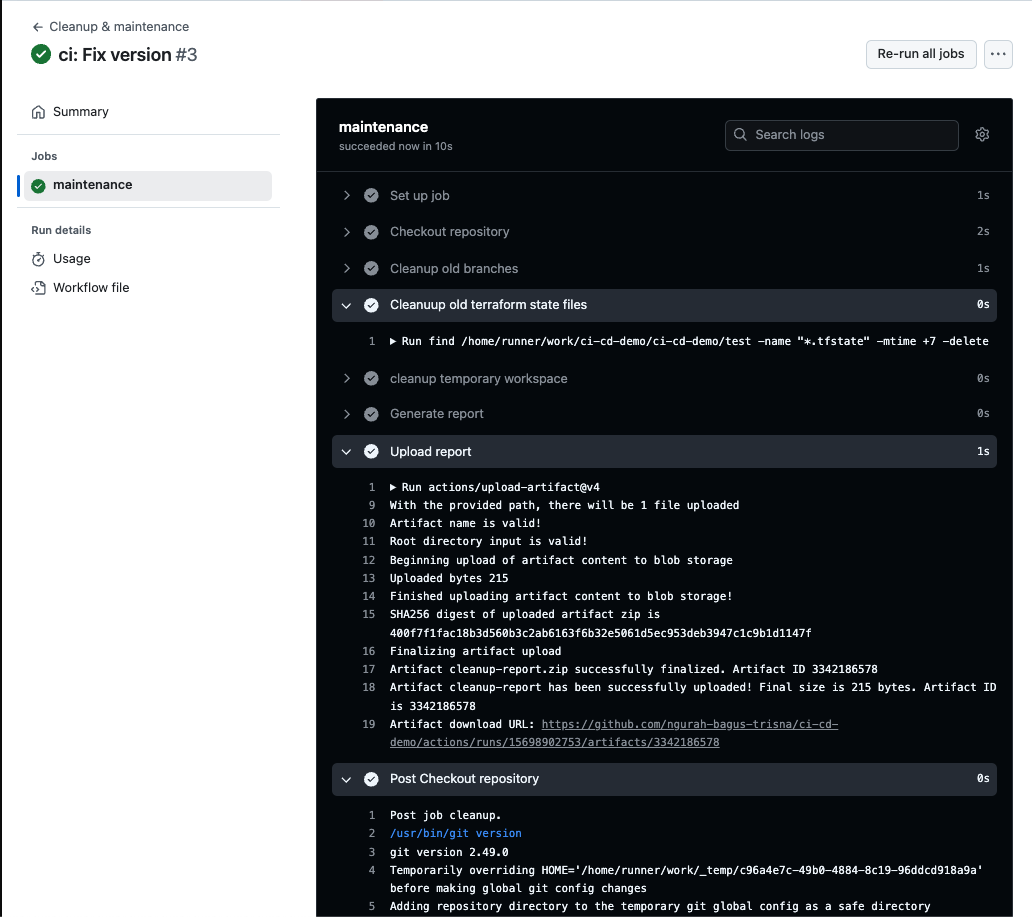

The workflow runs on every push to the `main` branch and on a cron schedule every Sunday at midnight (UTC).
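The `schedule` trigger uses five-field cron syntax (minute, hour, day of month, month, day of week), evaluated in UTC. To make the fields concrete, here is a small Go sketch of a matcher that supports only `*` and single numbers. `cronMatches` and `matchesTime` are hypothetical helpers, not part of any GitHub tooling. The sketch illustrates that `0 0 * * 0` matches only on Sundays, whereas `0 0 * * *` matches at midnight every day.

```go
package main

import (
	"fmt"
	"strconv"
	"strings"
	"time"
)

// cronMatches reports whether a five-field cron expression matches the given
// minute, hour, day of month, month, and day of week (0 = Sunday).
// Simplification: each field must be "*" or a single number; ranges, lists,
// and step values are not supported.
func cronMatches(expr string, minute, hour, dom, month, dow int) bool {
	fields := strings.Fields(expr)
	if len(fields) != 5 {
		return false
	}
	values := []int{minute, hour, dom, month, dow}
	for i, f := range fields {
		if f == "*" {
			continue
		}
		n, err := strconv.Atoi(f)
		if err != nil || n != values[i] {
			return false
		}
	}
	return true
}

// matchesTime evaluates the expression against t converted to UTC.
func matchesTime(expr string, t time.Time) bool {
	t = t.UTC()
	return cronMatches(expr, t.Minute(), t.Hour(), t.Day(), int(t.Month()), int(t.Weekday()))
}

func main() {
	start := time.Date(2025, time.June, 15, 0, 0, 0, 0, time.UTC)
	for d := 0; d < 3; d++ {
		t := start.AddDate(0, 0, d)
		fmt.Println(t.Weekday(), "daily:", matchesTime("0 0 * * *", t), "weekly:", matchesTime("0 0 * * 0", t))
	}
}
```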

- Push & Result

Answer :

- Scheduling cleanup during off-peak hours is important to make sure it does not affect the production environment.

- `retention-days` keeps storage efficient and simplifies auditing because old, unused artifacts are deleted automatically.

🧠 Reflection on the Answers

- Why is scheduling during off-peak hours important?

  Running cleanup when traffic is low (off-peak) reduces the risk of disrupting production jobs and avoids I/O bottlenecks on the runner.

- How does `retention-days` help with storage and auditing?

  By limiting the age of artifacts, we prevent stale files from piling up. This saves storage and simplifies auditing because only relevant artifacts are kept.
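Both the `find ... -mtime +7 -delete` step and artifact retention apply the same rule: anything older than a cutoff is removed. Below is a minimal Go sketch of that rule, assuming a hypothetical `fileEntry` type and `staleFiles` helper. The seven-day window mirrors the `find` command in the workflow, and the sketch only approximates how `find` rounds file ages.

```go
package main

import (
	"fmt"
	"strings"
)

const secondsPerDay = 24 * 60 * 60

// fileEntry is a hypothetical, simplified view of a file: its name plus its
// modification time as Unix seconds.
type fileEntry struct {
	Name    string
	ModUnix int64
}

// staleFiles returns the names of entries that end in suffix and were last
// modified more than maxAgeDays before nowUnix.
func staleFiles(entries []fileEntry, suffix string, nowUnix int64, maxAgeDays int) []string {
	cutoff := nowUnix - int64(maxAgeDays)*secondsPerDay
	var stale []string
	for _, e := range entries {
		if strings.HasSuffix(e.Name, suffix) && e.ModUnix < cutoff {
			stale = append(stale, e.Name)
		}
	}
	return stale
}

func main() {
	now := int64(30 * secondsPerDay) // pretend "now" is day 30
	entries := []fileEntry{
		{"old.tfstate", 10 * secondsPerDay},    // 20 days old: stale
		{"recent.tfstate", 28 * secondsPerDay}, // 2 days old: kept
		{"old.log", 1 * secondsPerDay},         // wrong suffix: ignored
	}
	fmt.Println(staleFiles(entries, ".tfstate", now, 7))
}
```

A real cleanup tool would collect the entries by walking a directory (for example with `filepath.WalkDir`) and delete each stale file with `os.Remove`. Keeping the selection rule separate makes it easy to test without touching the disk.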